5 Reasons to Use Protocol Buffers Instead of JSON For Your Next Service

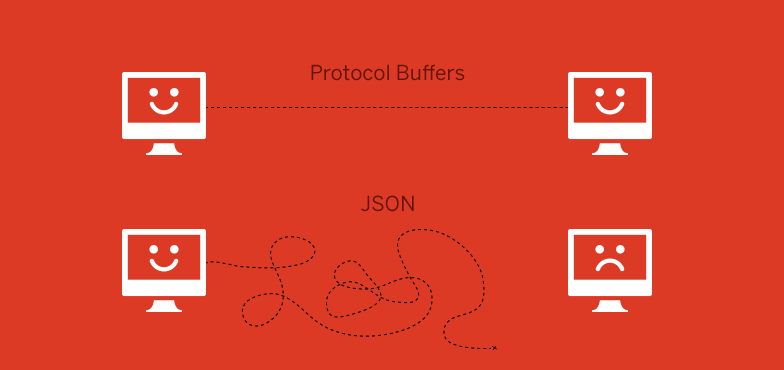

Service-Oriented Architecture has a well-deserved reputation amongst Ruby and Rails developers as a solid approach to easing painful growth by extracting concerns from large applications. These new, smaller services typically still use Rails or Sinatra, and use JSON to communicate over HTTP. Though JSON has many obvious advantages as a data interchange format - it is human readable, well understood, and typically performs well - it also has its issues.

Where browsers and JavaScript are not consuming the data directly – particularly in the case of internal services – it’s my opinion that structured formats, such as Google’s Protocol Buffers, are a better choice than JSON for encoding data. If you’ve never seen Protocol Buffers before, you can check out some more information here, but don’t worry - I’ll give you a brief introduction to using them in Ruby before listing the reasons why you should consider choosing Protocol Buffers over JSON for your next service.

A Brief Introduction to Protocol Buffers

First of all, what are Protocol Buffers? The docs say:

“Protocol Buffers are a way of encoding structured data in an efficient yet extensible format.”

Google developed Protocol Buffers for use in their internal services. It is a binary encoding format that allows you to specify a schema for your data using a specification language, like so:

message Person {

required int32 id = 1;

required string name = 2;

optional string email = 3;

}

You can package messages within namespaces or declare them at the top level as above. The snippet defines the schema for a Person data type that has three fields: id, name, and email. In addition to naming a field, you can provide a type that will determine how the data is encoded and sent over the wire - above we see an int32 type and a string type. Keywords for validation and structure are also provided (required and optional above), and fields are numbered, which aids in backward compatibility, which I’ll cover in more detail below.

The Protocol Buffers specification is implemented in various languages: Java, C, Go, etc. are all supported, and most modern languages have an implementation if you look around. Ruby is no exception and there are a few different Gems that can be used to encode and decode data using Protocol Buffers. What this means is that one spec can be used to transfer data between systems regardless of their implementation language.

For example, installing the ruby-protocol-buffers Ruby Gem installs a binary called ruby-protoc that can be used in combination with the main Protocol Buffers library (brew install protobuf on OSX) to automatically generate stub class files that are used to encode and decode your data for you. Running the binary against the proto file above yields the following Ruby class:

#!/usr/bin/env ruby

# Generated by the protocol buffer compiler. DO NOT EDIT!

require 'protocol_buffers'

# forward declarations

class Person < ::ProtocolBuffers::Message; end

class Person < ::ProtocolBuffers::Message

set_fully_qualified_name "Person"

required :int32, :id, 1

required :string, :name, 2

optional :string, :email, 3

end

As you can see, by providing a schema, we now automatically get a class that can be used to encode and decode messages into Protocol Buffer format (inspect the code of the ProtocolBuffers::Message base class in the Gem for more details). Now that we’ve seen a bit of an overview, let’s dive in to the specifics a bit more as I try to convince you to consider taking a look at Protocol Buffers - here are five reasons to start.

Reason #1: Schemas Are Awesome

There is a certain painful irony to the fact that we carefully craft our data models inside our databases, maintain layers of code to keep these data models in check, and then allow all of that forethought to fly out the window when we want to send that data over the wire to another service. All too often we rely on inconsistent code at the boundaries between our systems that don’t enforce the structural components of our data that are so important. Encoding the semantics of your business objects once, in proto format, is enough to help ensure that the signal doesn’t get lost between applications, and that the boundaries you create enforce your business rules.

Reason #2: Backward Compatibility For Free

Numbered fields in proto definitions obviate the need for version checks which is one of the explicitly stated motivations for the design and implementation of Protocol Buffers. As the developer documentation states, the protocol was designed in part to avoid “ugly code” like this for checking protocol versions:

if (version == 3) {

...

} else if (version > 4) {

if (version == 5) {

...

}

...

}

With numbered fields, you never have to change the behavior of code going forward to maintain backward compatibility with older versions. As the documentation states, once Protocol Buffers were introduced:

“New fields could be easily introduced, and intermediate servers that didn’t need to inspect the data could simply parse it and pass through the data without needing to know about all the fields.”

Having deployed multiple JSON services that have suffered from problems relating to evolving schemas and backward compatibility, I am now a big believer in how numbered fields can prevent errors and make rolling out new features and services simpler.

Reason #3: Less Boilerplate Code

In addition to explicit version checks and the lack of backward compatibility, JSON endpoints in HTTP based services typically rely on hand-written ad-hoc boilerplate code to handle the encoding and decoding of Ruby objects to and from JSON. Parser and Presenter classes often contain hidden business logic and expose the fragile nature of hand parsing each new data type when a stub class as generated by Protocol Buffers (that you generally never have to touch) can provide much of the same functionality without all of the headaches. As your schema evolves so too will your proto generated classes (once you regenerate them, admittedly), leaving more room for you to focus on the challenges of keeping your application going and building your product.

Reason #4: Validations and Extensibility

The required, optional, and repeated keywords in Protocol Buffers definitions are extremely powerful. They allow you to encode, at the schema level, the shape of your data structure, and the implementation details of how classes work in each language are handled for you. The Ruby protocol_buffers library will raise exceptions, for example, if you try to encode an object instance which does not have the required fields filled in. You can also always change a field from being required to being optional or vice-versa by simply rolling to a new numbered field for that value. Having this kind of flexibility encoded into the semantics of the serialization format is incredibly powerful.

Since you can also embed proto definitions inside others, you can also have generic Request and Response structures which allow for the transport of other data structures over the wire, creating an opportunity for truly flexible and safe data transfer between services. Database systems like Riak use protocol buffers to great effect - I recommend checking out their interface for some inspiration.

Reason #5: Easy Language Interoperability

Because Protocol Buffers are implemented in a variety of languages, they make interoperability between polyglot applications in your architecture that much simpler. If you’re introducing a new service with one in Java or Go, or even communicating with a backend written in Node, or Clojure, or Scala, you simply have to hand the proto file to the code generator written in the target language and you have some nice guarantees about the safety and interoperability between those architectures. The finer points of platform specific data types should be handled for you in the target language implementation, and you can get back to focusing on the hard parts of your problem instead of matching up fields and data types in your ad hoc JSON encoding and decoding schemes.

When Is JSON A Better Fit?

There do remain times when JSON is a better fit than something like Protocol Buffers, including situations where:

- You need or want data to be human readable

- Data from the service is directly consumed by a web browser

- Your server side application is written in JavaScript

- You aren’t prepared to tie the data model to a schema

- You don’t have the bandwidth to add another tool to your arsenal

- The operational burden of running a different kind of network service is too great

And probably lots more. In the end, as always, it’s very important to keep tradeoffs in mind and blindly choosing one technology over another won’t get you anywhere.

Conclusion

Protocol Buffers offer several compelling advantages over JSON for sending data over the wire between internal services. While not a wholesale replacement for JSON, especially for services which are directly consumed by a web browser, Protocol Buffers offers very real advantages not only in the ways outlined above, but also typically in terms of speed of encoding and decoding, size of the data on the wire, and more.

What are some services you could extract from your monolithic application now? Would you choose JSON or Protocol Buffers if you had to do it today? We’d love to hear more about your experiences with either protocol in the comments below - let’s get discussing!